By Steffen Hoellinger

From automating routine tasks to providing real-time insights to inform complex decisions, AI agents and copilots are poised to become an integral part of enterprise operations. At least that’s true for the organizations that can figure out how to supply large language models (LLMs) with real-time, contextualized, and trustworthy data in a secure and scalable way.

By Sean Falconer

This article was originally published on InfoWorld on Jan. 28, 2025. While large language models (LLMs) are useful, their real power emerges when they can act on insights, automating a broader range of problems. Reasoning agents have a long history in artificial intelligence (AI) research; the term refers to software that can generalize from what it has previously seen and apply that knowledge to situations it hasn’t seen before.

By Kai Waehner

As real-time data processing becomes a cornerstone of modern applications, the ability to integrate machine learning model inference with Apache Flink offers developers a powerful tool for on-demand predictions in areas like fraud detection, customer personalization, predictive maintenance, and customer support. Flink enables developers to connect real-time data streams to external machine learning models through remote inference, where models are hosted on dedicated model servers and accessed via APIs.

By Suvig Sharma

In today’s data-driven world, the ability to turn raw data into actionable insights is no longer a nice-to-have; it’s a necessity for delivering exemplary citizen services. Singapore’s Smart Nation initiative is built on the idea that data, when utilized effectively, can transform public services and improve lives.

By Confluent

New partnership eliminates data silos for enterprises with Apache Kafka and Delta Lake.

By Suguna Ravanappa

With almost two years at Confluent under her belt, Suguna Ravanappa has taken impressive strides as a people manager. Her eight-person team of engineers in the Global Support organization helps customers tackle technical challenges in their data streaming environments. According to Suguna, leading this team and being part of Confluent’s unique company culture has helped her develop stronger skills as both a leader and a collaborator. Learn more about her experience.

By Braeden Quirante

Confluent and AWS Lambda can be used to build scalable and real-time event-driven architectures (EDAs) that respond to specific business events. Confluent provides a streaming SaaS solution based on Apache Kafka and built on Kora: The Cloud-Native Engine for Apache Kafka, allowing you to focus on building event-driven applications without operating the underlying infrastructure.

By Jay Kreps

We’re excited to announce an expanded partnership between Confluent and Databricks to dramatically simplify the integration between analytical and operational systems. This is particularly important as enterprises want to shorten the deployment time of AI and real-time data applications. This partnership enables those enterprises to spend less time fussing over siloed data and governance and more time creating value for their customers.

By Mike Wallace

Confluent has achieved FedRAMP Ready status for Confluent Cloud for Government, and Confluent is now listed on the FedRAMP Marketplace as a FedRAMP Ready vendor.

By Sean Falconer

I host two podcasts, Software Engineering Daily and Software Huddle, and often appear as a guest on other shows. Promoting episodes, whether I’m hosting or featured, helps highlight the great conversations I have, but finding the time to write a thoughtful LinkedIn post for each one is tough. Between hosting, work, and life, it just doesn’t always happen.

By Confluent

We’re diving even deeper into the fundamentals of data streaming to explore stream processing—what it is, the best tools and frameworks, and its real-world applications. Our guests, Anna McDonald, Distinguished Technical Voice of the Customer at Confluent, and Abhishek Walia, Staff Customer Success Technical Architect at Confluent, break down what stream processing is, how it differs from batch processing, and why tools like Flink are game changers.

By Confluent

How do you build systems that keep up with a nonstop flood of data? Anna McDonald, Distinguished Technical Voice of the Customer at Confluent, explains how.

By Confluent

Finding pain is key to starting your real-time data streaming journey, as Tim Berglund, VP of Developer Relations at Confluent, shares.

By Confluent

Power AI-driven decision-making with Confluent’s Data Streaming Platform and Databricks’ Data Intelligence Platform. Watch this step-by-step demo to learn how to build a real-time social media AI chatbot that instantly engages with customer posts, like product reviews. New to Confluent? Experience unified Apache Kafka and Apache Flink with a free trial.

By Confluent

Real-time data streaming is shaking up everything we know about modern data systems. If you’re ready to dive in but unsure where to begin, no worries. That’s why we’re here. Our first episode breaks down the basics of data streaming, from what it is to its pivotal role in processing and transferring data in a fast-paced digital environment. Your guide is Tim Berglund, VP of Developer Relations at Confluent, where he and his team work to make data streaming and its emerging toolset accessible to all developers.

By Confluent

Data streaming shifts the focus from storing data to acting on it in real time. Tim Berglund, Confluent’s VP of Developer Relations, breaks down how.

By Confluent

Learn how Audacy, a leading multi-platform audio content company, transformed its operations with Confluent’s real-time data streaming platform, delivering personalized audio experiences to 200 million listeners. By moving from complex point-to-point systems to a seamless data streaming architecture, Audacy has streamlined operations and can now focus on delivering the personalized content, dynamic advertising, and data-driven insights it needs to stay ahead in a demanding market.

By Confluent

Discover how Confluent’s Data Streaming Platform and Microsoft Azure are revolutionizing the retail industry. In this video, learn what real-time data streaming and AI-powered insights can do for retailers, and explore how Confluent and Azure’s seamless integration enables retailers to break down data silos, streamline operations, and accelerate innovation with scalable, enterprise-ready solutions. Whether you’re looking to modernize your retail infrastructure, enhance customer engagement, or drive operational efficiency, this video is your guide to leveraging AI and real-time data streaming.

By Confluent

How do you bring real-time data to AI and machine learning models? Kai Waehner, Field CTO at Confluent, explains how to use Flink for real-time model inference to power use cases for generative AI, real-time analytics, predictive maintenance, and more.

By Confluent

In this episode of the Google Cloud Marketplace Marvels series, theCUBE Research’s John Furrier talks to Shaun Clowes, CPO of Confluent, and Stephen Orban, VP of Migrations, ISVs, and Marketplace at Google Cloud. Clowes highlights the strategic partnership with Google Cloud, emphasizing seamless integration, scalable solutions, and flexibility in marketplace consumption models. Together, they explore the platform’s impact on AI initiatives, enterprise analytics, and rapid IT modernization, demonstrating how businesses can build next-generation data infrastructure one step at a time.

By Confluent

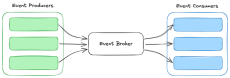

Traditional messaging middleware, such as message queues (MQs), enterprise service buses (ESBs), and extract, transform, and load (ETL) tools, has been widely used for decades to handle message distribution and inter-service communication across distributed applications. However, these tools can no longer keep up with modern applications’ needs for asynchronicity, heterogeneous datasets, and high-volume throughput across hybrid and multicloud environments.

By Confluent

Why a data mesh? Predicated on delivering data as a first-class product, data mesh focuses on making it easy to publish and access important data across your organization. An event-driven data mesh combines the scale and performance of data in motion with product-focused rigor and self-service capabilities, putting data front and center in both operational and analytical use cases.

By Confluent

When it comes to fraud detection in financial services, streaming data with Confluent enables you to build the right intelligence, as early as possible, for precise and predictive responses. Learn how Confluent's event-driven architecture and streaming pipelines deliver a continuous flow of data, aggregated from wherever it resides in your enterprise, to whichever application or team needs to see it. Enrich each interaction, each transaction, and each anomaly with real-time context so your fraud detection systems have the intelligence to get ahead.

By Confluent

Many forces affect software today: larger datasets, geographical disparities, complex company structures, and the growing need to be fast and nimble in the face of change. Proven approaches such as service-oriented architecture (SOA) and event-driven architecture (EDA) are joined by newer techniques such as microservices, reactive architectures, DevOps, and stream processing. Many of these patterns are successful by themselves, but as this practical ebook demonstrates, they provide a more holistic and compelling approach when applied together.

By Confluent

Data pipelines do much of the heavy lifting in organizations for integrating, transforming, and preparing data for subsequent use in data warehouses for analytical use cases. Despite being critical to the data value stream, data pipelines fundamentally haven't evolved in the last few decades. These legacy pipelines are holding organizations back from really getting value out of their data as real-time streaming becomes essential.

By Confluent

In today's fast-paced business world, relying on outdated data can prove to be an expensive mistake. To maintain a competitive edge, it's crucial to have accurate real-time data that reflects the current state of your business processes. With real-time data streaming, you can make informed decisions and drive value at a moment's notice. So, why settle for being simply data-driven when you can take your business to the next level with real-time data insights?

By Confluent

Data pipelines do much of the heavy lifting in organizations for integrating, transforming, and preparing data for subsequent use in downstream systems for operational use cases. Despite being critical to the data value stream, data pipelines fundamentally haven't evolved in the last few decades. These legacy pipelines are holding organizations back from really getting value out of their data as real-time streaming becomes essential.

By Confluent

Shoe retail titan NewLimits relies on a jumble of homegrown ETL pipelines and batch-based data systems. As a result, sluggish and inefficient data transfers are frustrating internal teams and holding back the company's development velocity and data quality.