Defining Asynchronous Microservice APIs for Fraud Detection | Designing Event-Driven Microservices

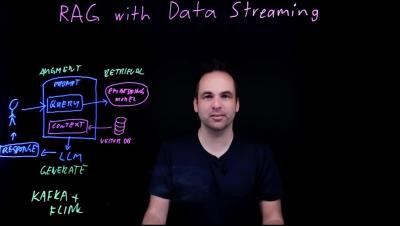

In this video, Wade explores how Tributary Bank begins decomposing its monolith into a series of microservices, starting with the Fraud Detection service. Before the team can move anything, they first have to untangle the existing code. You'll see how they extract a variety of API methods from the monolith and define a clean API that will allow them to move the functionality to an asynchronous, event-driven microservice.
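As a rough illustration of what a "clean API" for asynchronous fraud detection might look like, here is a minimal Python sketch. It is not the code from the video: the names `Transaction`, `FraudResult`, `FraudDetection`, `InMemoryFraudDetection`, the `submit` method, and the amount threshold rule are all hypothetical, and an in-memory queue stands in for a real event stream. The point it shows is that the caller hands off a transaction without waiting for an answer, and the result arrives later as a separate event.

```python
from dataclasses import dataclass
from queue import Queue
from typing import Callable, Protocol


@dataclass(frozen=True)
class Transaction:
    # Hypothetical event payload; the real fields are not given in the source.
    transaction_id: str
    account_id: str
    amount: float


@dataclass(frozen=True)
class FraudResult:
    # Hypothetical result event emitted once a transaction has been checked.
    transaction_id: str
    is_fraudulent: bool


class FraudDetection(Protocol):
    """The API boundary: callers submit work and do not wait for a result."""

    def submit(self, transaction: Transaction) -> None: ...


class InMemoryFraudDetection:
    """Stand-in implementation that uses a local queue instead of an event stream."""

    def __init__(
        self,
        on_result: Callable[[FraudResult], None],
        threshold: float = 10_000.0,  # assumed placeholder rule, for illustration only
    ) -> None:
        self._events: Queue = Queue()
        self._on_result = on_result
        self._threshold = threshold

    def submit(self, transaction: Transaction) -> None:
        # Fire and forget: enqueue the event and return immediately.
        self._events.put(transaction)

    def process_pending(self) -> None:
        # In a real service this loop would be a consumer running separately.
        while not self._events.empty():
            transaction = self._events.get()
            flagged = transaction.amount > self._threshold
            self._on_result(FraudResult(transaction.transaction_id, flagged))


results: list = []
service = InMemoryFraudDetection(on_result=results.append)
service.submit(Transaction("t-1", "a-1", 50.0))
service.submit(Transaction("t-2", "a-1", 25_000.0))
pending_before = len(results)  # nothing has been evaluated yet
service.process_pending()
```

Because the monolith would depend only on the `FraudDetection` interface, the in-memory version could later be swapped for one that publishes to an event stream without changing the calling code.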