ClearML

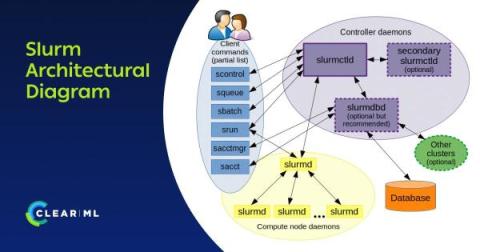

How ClearML Helps Teams Get More out of Slurm

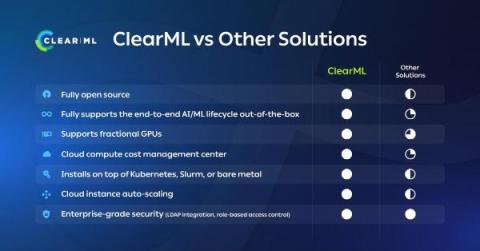

ClearML Supports Seamless Orchestration and Infrastructure Management for Kubernetes, Slurm, PBS, and Bare Metal

Why RAG Has a Place in Your LLMOps

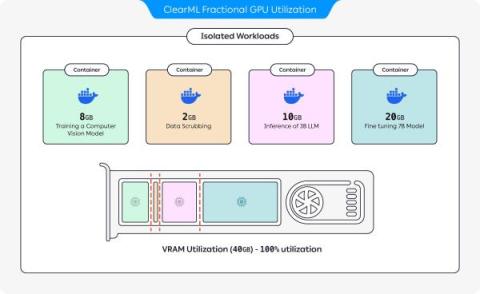

Open Source Fractional GPUs for Everyone, Now Available from ClearML

The State of AI Infrastructure at Scale 2024

Easily Train, Manage, and Deploy Your AI Models With Scalable and Optimized Access to Your Company's AI Compute. Anywhere.

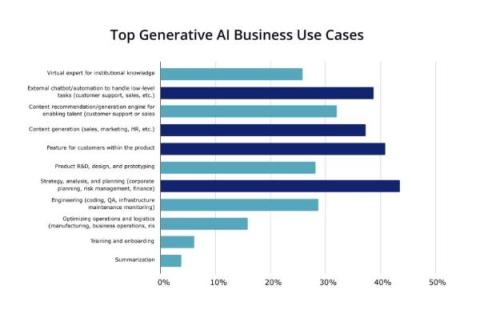

Establishing A Framework For Effective Adoption and Deployment of Generative AI Within Your Organization

Adopting and deploying Generative AI within your organization is pivotal to driving innovation and outsmarting the competition while also creating efficiency, productivity, and sustainable growth. AI adoption is not a one-size-fits-all process: each organization has its own set of use cases, challenges, objectives, and resources.

Using ClearML and MONAI for Deep Learning in Healthcare

This tutorial shows how to use ClearML to manage MONAI experiments. MONAI (Medical Open Network for AI) originated from a project co-founded by NVIDIA. It is a domain-specific, open-source, PyTorch-based framework for deep learning in healthcare imaging. This post shows how to use the ClearML handlers with the MONAI Toolkit. To view our code example, visit our GitHub page.

It's Midnight. Do You Know Which AI/ML Use Cases Are Producing ROI?

In a recent blog post on six key predictions for Enterprise AI in 2024, we noted that while businesses will know which use cases they want to test, they likely won’t know which ones will deliver ROI on their AI and ML investments. That’s a problem: in our first survey this year, we found that 57% of respondents’ boards expect a double-digit increase in revenue from AI/ML investments in the coming fiscal year, while 37% expect a single-digit increase.