

Making Flink Serverless, With Queries for Less Than a Penny

Imagine easily enriching data streams and building stream processing applications in the cloud, without worrying about capacity planning, infrastructure and runtime upgrades, or performance monitoring. That's where our serverless Apache Flink® service comes in, as announced at this year’s Current | The Next Generation of Kafka Summit.

What is Confluent?

Enterprise Apache Kafka Cluster Strategies: Insights and Best Practices

Apache Kafka® has become the de facto standard for streaming data, helping companies deliver exceptional customer experiences, automate operations, and become software. As companies increase their use of real-time data, we have seen Kafka clusters proliferate within many enterprises. Often, siloed application and infrastructure teams set up and manage new clusters to solve new use cases as they arise.

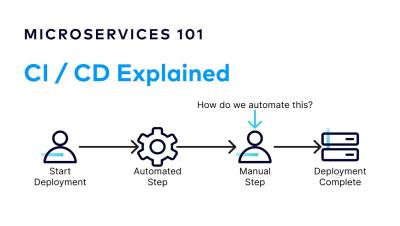

Asynchronous Events | Microservices 101

Introducing Data Portal in Stream Governance

Today, we’re excited to announce the general availability of Data Portal on Confluent Cloud. Data Portal is built on top of Stream Governance, the industry’s only fully managed data governance suite for Apache Kafka® and data streaming. The developer-friendly, self-service UI provides an easy and curated way to find, understand, and enrich all of your data streams, enabling users across your organization to build and launch streaming applications faster.

4 Reasons to Integrate Apache Kafka and Amazon S3

Amazon S3 is a standout storage service known for its ease of use, power, and affordability. When combined with Apache Kafka, a popular streaming platform, it can significantly reduce costs and enhance service levels. In this post, we’ll explore various ways S3 is put to work in streaming data platforms.

How To Use Streaming Joins with Apache Flink

A Deep Dive Into Sending With librdkafka

In a previous blog post (How To Survive an Apache Kafka® Outage), I outlined the effects on applications during partial or total Kafka cluster outages and proposed some architectural strategies to handle these types of service interruptions. The applications most heavily impacted by this type of outage are external interfaces that receive data, do not control request flow, and possibly perform some form of business transaction with the outside world before producing to Kafka.