
Kafka

Build real-time apps with Streaming SQL

Introducing the Apache Kafka App Catalog

Working with Apache Kafka and real-time applications comes with challenges. One of them is visibility into the deployed applications and their dependency on what we call the “data fabric” (for the purposes of this blog, Kafka and all its state and configuration). If you’ve built a multi-tenant real-time data platform with Kafka, where teams are deploying applications outside your jurisdiction, this is where the pain is particularly acute. It goes something like this.

The best of Kafka Summit 2020

After a self-isolated and event-free spring, some of us around the world welcomed a more promising summer. You might be taking some time away on a socially distanced holiday. You might be taking some time away from the day-to-day at home. But if a cold beer in the sun isn't enough to make up for these difficult months, the premier event for the Streaming Data Community is back! Kafka Summit has gone virtual this year, which means you can attend from anywhere.

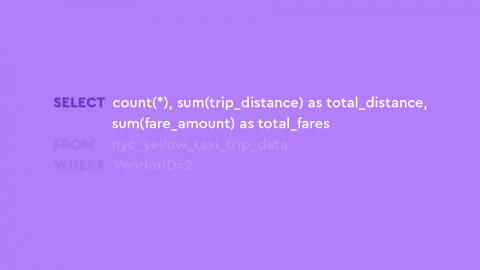

Navigating & Querying Apache Kafka with SQL

Why our new Streaming SQL opens up your data platform

SQL has long been the universal language for working with data. In fact, it’s more relevant today than it was 40 years ago. Many data technologies were born without it and inevitably ended up adopting it later on. Apache Kafka is one of them. At Lenses.io, we were the first in the market to develop a SQL layer for Kafka (yes, before KSQL) and to integrate it into several areas of our product for different workloads.

Why SQL is your key to querying Kafka

If you’re an engineer exploring a streaming platform like Kafka, chances are you’ve spent some time trying to work out what’s going on with the data in there. But if you’re introducing Kafka to a team of data scientists or developers unfamiliar with its idiosyncrasies, you might have spent days, weeks, or even months trying to tack on self-service capabilities. We’ve been there.

Data dump to data catalog for Apache Kafka

From data stagnating in warehouses to a growing number of real-time applications, in this article we explain why we need a new class of Data Catalogs: this time for real-time data. The 2010s brought us organizations “doing big data”. Teams were encouraged to dump it into a data lake and leave it for others to harvest. But data lakes soon became data swamps.

It Takes Two to Kafka: AWS MSK + DataOps

I recently ordered a ride share from a beach; the app struggled to find a car, so I had to make several requests. After the fourth or fifth attempt, my bank alerted me via SMS to possible fraudulent activity on my credit card. Each time I ordered a ride, the service put a pending charge on my card. After I texted back that it was just me, the bank reactivated my account. Though the process was annoying, I felt reassured that my bank could detect possible fraud that quickly.

Will your streaming data platform disturb your holiday?

Here's why you need to double down on your DataOps before your vacation. In the past few months, everything has changed at work (or at home). Q1 plans were scrapped. Reset buttons were smashed. It was all about cutting costs and keeping the lights on. Many app and data teams sought quick solutions and developed workarounds to data challenges and operational problems as people prepared to work from home for the foreseeable future. And now, it’s time for a holiday.