Iguazio

The Complete Guide to Using the Iguazio Feature Store with Azure ML - Part 1

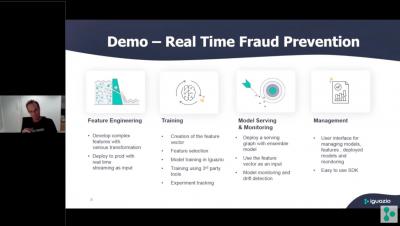

In this series of blog posts, we will showcase an end-to-end hybrid cloud ML workflow using the Iguazio MLOps Platform & Feature Store combined with Azure ML. This first post is an overview of the solution and the types of problems it solves; the next parts will be a technical deep dive into each step of the process.

Looking into 2022: Predictions for a New Year in MLOps

In an era where the passage of time seems to have changed somehow, it feels strange to already be reflecting on another year gone by. It’s a cliché for a reason: the world feels like it’s moving faster than ever, and in some completely unexpected directions. Sometimes it feels like we’re living in a time lapse when I consider the pace of technological progress I’ve witnessed in just a year.

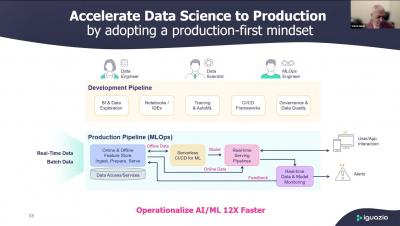

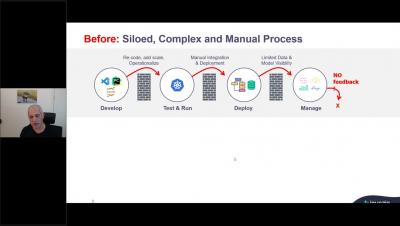

Adopting a Production-First Approach to Enterprise AI

After a year packed with one machine learning and data science event after another, it’s clear that there are a few different definitions of the term ‘MLOps’ floating around. One convention uses MLOps to mean the cycle of building an AI model: preparing the data, training the model, and evaluating it. This iterative, interactive cycle often includes AutoML capabilities, and anything that happens outside the scope of the trained model is not included in this definition.

Scaling NLP Pipelines at IHS Markit - MLOps Live #17

Automating MLOps for Deep Learning

ODSC West: Building Operational Pipelines for Machine and Deep Learning

ODSC West AI Expo Talk: Real-Time Feature Engineering with a Feature Store

ODSC West MLOps Keynote: Scaling NLP Pipelines at IHS Markit

Introduction to TF Serving

Machine learning (ML) model serving refers to the series of steps that turn a trained model into a service that another system can call to receive a relevant prediction for an end user. These steps typically include pre-processing the input, sending a prediction request to the model, and post-processing the model output to apply business logic.
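
The three serving steps described above can be sketched in plain Python. This is a minimal illustration only, not TF Serving's API: the feature names (`amount`, `is_new_customer`), the `stub_model` function, and the 0.5 decision threshold are all hypothetical stand-ins chosen for the example. In a real deployment, the model call would be a request to a serving endpoint.

```python
from typing import Callable, Dict, List, Sequence


def preprocess(raw: Dict) -> List[float]:
    """Step 1: turn a raw request payload into the numeric features the model expects."""
    # Hypothetical features: a scaled amount and a boolean flag encoded as 0/1.
    return [float(raw["amount"]) / 1000.0, 1.0 if raw["is_new_customer"] else 0.0]


def stub_model(features: Sequence[float]) -> float:
    """Step 2 (stand-in): a fake 'trained model' that returns a score in [0, 1].

    Assumption: in practice this would be a prediction request to a model server.
    """
    score = 0.6 * features[0] + 0.3 * features[1]
    return max(0.0, min(1.0, score))


def postprocess(score: float, threshold: float = 0.5) -> Dict:
    """Step 3: apply business logic to the raw model output."""
    decision = "review" if score >= threshold else "approve"
    return {"score": round(score, 3), "decision": decision}


def serve(raw: Dict, model: Callable[[Sequence[float]], float] = stub_model) -> Dict:
    """Chain the three steps into a single prediction service call."""
    features = preprocess(raw)
    score = model(features)
    return postprocess(score)


if __name__ == "__main__":
    print(serve({"amount": 500, "is_new_customer": True}))
```

Keeping each step as a separate function means the pre- and post-processing logic can be tested without a model, and the model call can be swapped for a real endpoint without touching the business rules.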