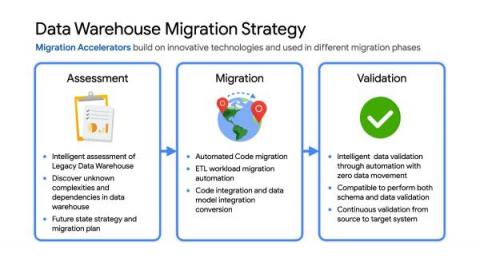

How to migrate an on-premises data warehouse to BigQuery on Google Cloud

Data teams across companies face the continuous challenge of consolidating data, processing it, and making it useful. They contend with a mixture of multiple ETL jobs, long ETL windows, capacity-bound on-premises data warehouses, and ever-increasing demands from users. They also need to make sure that their data processing meets the downstream requirements of ML, reporting, and analytics.