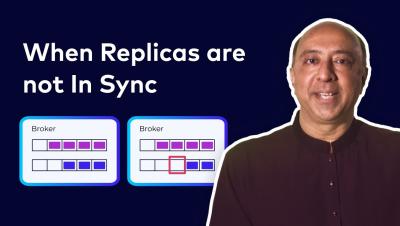

Replication in Apache Kafka Explained | Monitoring & Troubleshooting Data Streaming Applications

Learn how replication works in Apache Kafka, with a deep dive into its critical aspects, including:

Whether you're a systems architect, a developer, or just curious about Kafka, this video provides valuable insights and hands-on examples. Don't forget to check out our GitHub repo to get all of the code used in the demo and to contribute your own enhancements.