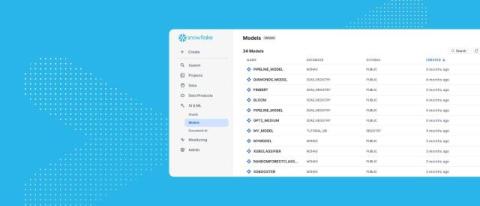

Snowflake ML Now Supports Expanded MLOps Capabilities for Streamlined Management of Features and Models

Bringing machine learning (ML) models into production is often hindered by fragmented MLOps processes that are difficult to scale with the underlying data. Many enterprises stitch together a mix of MLOps tools to build an end-to-end ML pipeline. Setting up and managing separate environments for features and models adds operational complexity that can be costly to maintain and difficult to use.