Build limitless workloads on BigQuery: New features beyond SQL

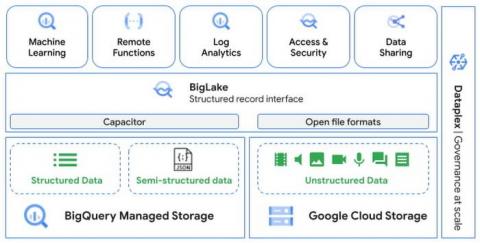

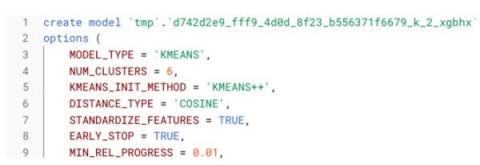

Our mission at Google Cloud is to help our customers fuel data-driven transformations. As a step toward this, BigQuery is moving beyond its SQL-only interface and providing new developer extensions for workloads that require programming beyond SQL. These flexible programming extensions are all offered without the limitations that come with running virtual servers.