
Machine Learning

Introduction to TF Serving

Machine learning (ML) model serving is the series of steps that turn a trained model into a service that other systems can query to get a prediction for an end user. These steps typically include pre-processing the input, sending a prediction request to the model, and post-processing the model output to apply business logic.
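As a rough illustration of those three steps, here is a minimal Python sketch. It assumes a TensorFlow Serving instance exposing its REST predict endpoint, with a request body of the form `{"instances": [...]}` and a response of the form `{"predictions": [...]}`. The host, port, model name `my_model`, the `age` and `income` input fields, the one-score-per-instance output shape, and the approve/review rule are all hypothetical placeholders, not details from the article.

```python
import json
import urllib.request
from typing import Callable, Dict, List

# Hypothetical endpoint: host, port, and model name are placeholders.
PREDICT_URL = "http://localhost:8501/v1/models/my_model:predict"


def preprocess(raw: Dict[str, float]) -> List[float]:
    """Step 1: turn a raw request into the feature vector the model expects.
    The field names and scaling constants are illustrative assumptions."""
    return [raw["age"] / 100.0, raw["income"] / 100_000.0]


def build_request_body(instances: List[List[float]]) -> str:
    """Wrap feature vectors in the row-format JSON body used by the REST predict call."""
    return json.dumps({"instances": instances})


def http_predict(body: str) -> dict:
    """Step 2: POST the body to the model server and decode the JSON reply."""
    request = urllib.request.Request(
        PREDICT_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def postprocess(score: float, threshold: float = 0.5) -> str:
    """Step 3: apply business logic to the raw model score (hypothetical rule)."""
    return "approve" if score >= threshold else "review"


def serve(raw: Dict[str, float], predict_fn: Callable[[str], dict] = http_predict) -> str:
    """Run pre-processing, the prediction request, and post-processing in order."""
    features = preprocess(raw)
    reply = predict_fn(build_request_body([features]))
    # Assumes the model returns one score per instance, e.g. [[0.73]].
    score = reply["predictions"][0][0]
    return postprocess(score)


def fake_predict(body: str) -> dict:
    """Stand-in for the model server so the pipeline can run offline:
    the 'score' is simply the mean of each instance's features."""
    instances = json.loads(body)["instances"]
    return {"predictions": [[sum(x) / len(x)] for x in instances]}


if __name__ == "__main__":
    print(serve({"age": 40, "income": 80_000}, predict_fn=fake_predict))
```

Passing the prediction call in as `predict_fn` keeps the pre- and post-processing testable without a running model server; in a real deployment the default `http_predict` would be used instead of the stand-in.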

Make Your Models Matter: What It Takes to Maximize Business Value from Your Machine Learning Initiatives

We are excited by the endless possibilities of machine learning (ML). We recognize that experimentation is an important component of any enterprise machine learning practice. But we also know that experimentation alone doesn’t yield business value. Organizations need to usher their ML models out of the lab (i.e., the proof-of-concept phase) and into deployment, which is otherwise known as being “in production”.

New Applied ML Prototypes Now Available in Cloudera Machine Learning

It’s no secret that data scientists have a difficult job. It feels like a lifetime ago that everyone was talking about data science as the sexiest job of the 21st century. Heck, it was so long ago that people were still meeting in person! Today, the sexy is starting to lose its shine. There’s recognition that it’s nearly impossible to find the unicorn data scientist that was the apple of every CEO’s eye in 2012.

Delivering Continuous Operation for Production ML

It Worked Fine in Jupyter. Now What?

You got through all the hurdles of getting the data you need; you worked hard training that model, and you are confident it will work. You just need to run it with a larger data set, more memory, and maybe GPUs. And then...well. Running your code at scale and in an environment other than yours can be a nightmare. You have probably experienced this or read about it in the ML community. How frustrating is that? All your hard work and nothing to show for it.

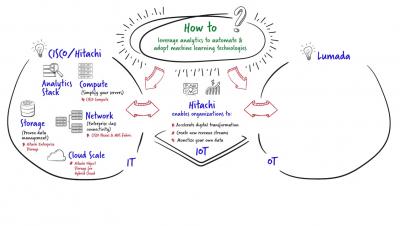

How to leverage analytics to automate & adopt machine learning technologies

The Ultimate Map to finding Halloween candy surplus

As Halloween night quickly approaches, there is only one question on every kid’s mind: how can I maximize my candy haul this year with the best possible candy? This kind of question lends itself perfectly to data science approaches that enable quick and intuitive analysis of data across multiple sources.

How to Bring Breakthrough Performance and Productivity To AI/ML Projects

By Jean-Baptiste Thomas, Pure Storage & Yaron Haviv, Co-Founder & CTO of Iguazio

You trained and built models using interactive tools over data samples, and are now working on building an application around them to bring tangible value to the business. However, a year later, you find that you have spent an endless amount of time and resources, but your application is still not fully operational, or isn’t performing as well as it did in the lab. Don’t worry, you are not alone.