Shift left to write data once, read as tables or streams

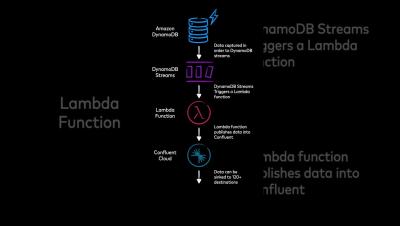

Shift Left rethinks how we circulate, share, and manage data in our organizations, using data streams, Change Data Capture (CDC), Flink SQL, and Tableflow. It addresses the challenges of multi-hop and medallion architectures built on batch pipelines by moving data preparation, cleaning, and schema definition to the point where data is created. As a result, you can build fresh, trustworthy datasets once and consume them as streams for operational use cases or as Apache Iceberg tables for analytical use cases.
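To make the "write once, read as tables or streams" idea concrete, here is a minimal sketch in plain Python. It is only an in-memory stand-in and does not use Kafka, Flink SQL, Tableflow, or Iceberg APIs. The `Order` schema, the `clean` function, and the `DataProduct` class are hypothetical names introduced for illustration. The point it tries to show is that validation and schema enforcement happen once, at write time, and both readers see the same cleaned records.

```python
from dataclasses import dataclass, asdict
from typing import Iterator


@dataclass(frozen=True)
class Order:
    """Hypothetical schema, enforced where the data is created."""
    order_id: str
    amount_cents: int
    currency: str


def clean(raw: dict) -> Order:
    """Validate and normalize a raw record at the source; raise on bad data."""
    order_id = str(raw["order_id"]).strip()
    if not order_id:
        raise ValueError("order_id must not be empty")
    amount_cents = int(raw["amount_cents"])
    if amount_cents < 0:
        raise ValueError("amount_cents must be non-negative")
    currency = str(raw["currency"]).strip().upper()
    return Order(order_id, amount_cents, currency)


class DataProduct:
    """Append-only log of cleaned records (an in-memory stand-in for a topic)."""

    def __init__(self) -> None:
        self._log: list[Order] = []

    def write(self, raw: dict) -> None:
        # Cleaning happens exactly once, before the record is stored.
        self._log.append(clean(raw))

    def as_stream(self, offset: int = 0) -> Iterator[Order]:
        # Operational readers consume records in order from an offset.
        yield from self._log[offset:]

    def as_table(self) -> list[dict]:
        # Analytical readers see the same records as rows.
        return [asdict(order) for order in self._log]


product = DataProduct()
product.write({"order_id": " A1 ", "amount_cents": "1250", "currency": "usd"})
product.write({"order_id": "A2", "amount_cents": 300, "currency": "EUR"})
```

Neither `as_stream` nor `as_table` repeats any cleaning logic. In a multi-hop batch design, that logic would typically be reapplied at each downstream hop.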