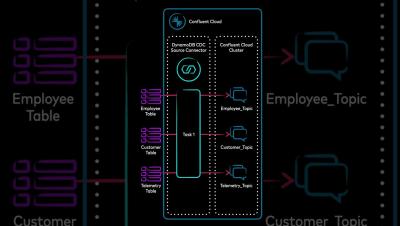

Connect with Confluent: Celebrating One Year and 50+ Integrations

In just 12 months, the Connect with Confluent (CwC) technology partner program has grown from an ambitious new initiative to expand the data streaming ecosystem into a thriving program that is rapidly increasing the breadth and value of real-time data. It now offers more than 50 integrations, each one extending the capabilities of Confluent’s unified data streaming platform for Apache Kafka and Apache Flink.