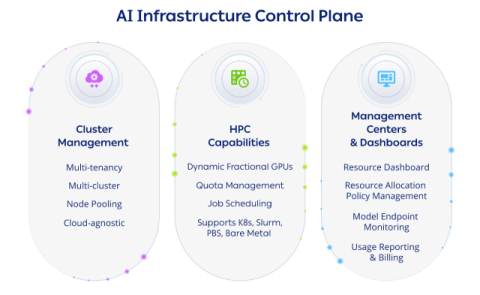

ClearML Announces AI Infrastructure Control Plane

We are excited to announce the launch of our AI Infrastructure Control Plane, designed as a universal operating system for AI infrastructure. With this launch, we make it easier for IT and DevOps teams to gain full control over their AI infrastructure, manage complex environments, maximize compute utilization, and deliver an optimized self-serve experience for their AI Builders.