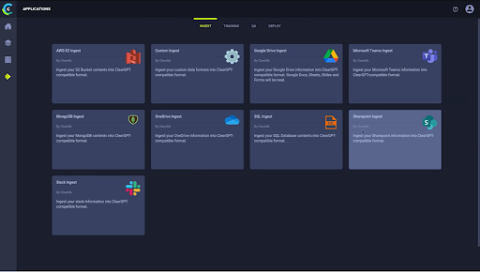

Latest News

It's Midnight. Do You Know Which AI/ML Use Cases Are Producing ROI?

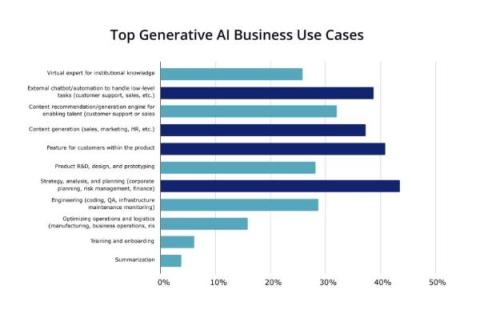

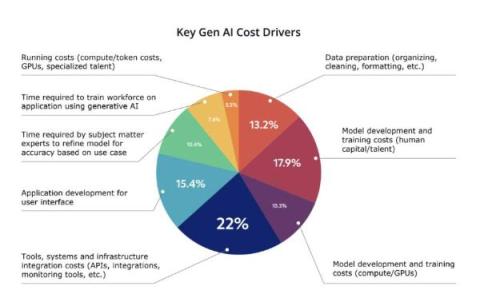

In a recent blog post on six key predictions for Enterprise AI in 2024, we noted that while businesses will know which use cases they want to test, they likely won’t know which ones will deliver ROI on their AI and ML investments. That’s problematic, because in our first survey this year, we found that 57% of respondents’ boards expect a double-digit increase in revenue from AI/ML investments in the coming fiscal year, while 37% expect a single-digit increase.

Scaling MLOps Infrastructure: Components and Considerations for Growth

How to Build Accurate and Scalable LLMs with ClearGPT

Large Language Models (LLMs) have now evolved to include capabilities that simplify and/or augment a wide range of jobs. As enterprises consider wide-scale adoption of LLMs for use cases across their workforce or within applications, it’s important to note that while foundation models provide logic and the ability to understand commands, they lack the core knowledge of the business. That’s where fine-tuning becomes a critical step.

How to Build a Smart GenAI Call Center App

Six Key Predictions for Artificial Intelligence in the Enterprise

As we head into 2024, AI continues to evolve at breakneck speed. The adoption of AI in large organizations is no longer a matter of “if,” but “how fast.” Companies have realized that harnessing the power of AI is not only a competitive advantage but also a necessity for staying relevant in today’s dynamic market. In this blog post, we’ll look at AI within the enterprise and outline six key predictions for the coming year.

Build and deploy ML with ease using Snowpark ML, Snowflake Notebooks, and Snowflake Feature Store

Snowflake has invested heavily in extending the Data Cloud to AI/ML workloads, starting in 2021 with the introduction of Snowpark, the set of libraries and runtimes in Snowflake that securely deploy and process Python and other popular programming languages.

Harness the Power of Pinecone with Cloudera's New Applied Machine Learning Prototype

At Cloudera, we continuously strive to empower organizations to unlock the full potential of their data, catalyzing innovation and driving actionable insights. And so we are thrilled to introduce our latest applied ML prototype (AMP)—a large language model (LLM) chatbot customized with website data using Meta’s Llama2 LLM and Pinecone’s vector database.

Five ways Fivetran lays the foundation for machine learning

Data integration is essential for analytics, regression analysis and your first forays into generative AI.

ClearML Announces Extensive New Capabilities for Optimizing GPU Compute Resources

To ensure a frictionless AI/ML development lifecycle, ClearML recently announced extensive new capabilities for managing, scheduling, and optimizing GPU compute resources. These capabilities benefit customers regardless of whether their setup is on-premises, in the cloud, or hybrid. Under ClearML’s Orchestration menu, a new Enterprise Cost Management Center enables customers to better visualize and oversee what is happening in their clusters.